| Day | Section | Topic |
|---|---|---|
| Mon, Jan 12 | 1.2 | Data tables, variables, and individuals |
| Wed, Jan 14 | 2.1.3 | Histograms & skew |
| Fri, Jan 16 | 2.1.5 | Boxplots |

Today we covered data tables, individuals, and variables. We also talked about the difference between categorical and quantitative variables.

In the data table in the example above, who or what are the individuals? What are the variables and which are quantitative and which are categorical?

If we want to compare states to see which are safer, why is it better to compare the rates instead of the total fatalities?

What is wrong with this student’s answer to the previous question?

Rates are better because they are more precise and easier to understand.

I like this incorrect answer because it is a perfect example of bullshit. This student doesn’t know the answer, so they are trying to write something that sounds good and earns partial credit. Try to avoid writing bullshit. If you catch yourself writing B.S. on one of my quizzes or tests, then you can be sure that you are missing a really simple idea, and you should see if you can figure out what it is.

We talked briefly about making bar charts for categorical data.

Then we introduced stem & leaf plots (stemplots) and histograms for quantitative data. We started by making a stemplot and a histogram for the weights of the students in the class. We also talked about how to tell if data is skewed left or skewed right.

Can you think of a distribution that is skewed left?

Why isn’t this bar graph from the book a histogram?

Then we did this workshop:

We finished by reviewing the mean and the median.

The median of n numbers is located at position (n + 1)/2 in the sorted list.

The median is not affected by skew, but the average is pulled in the direction of the skew. So the average will be bigger than the median when the data is skewed right, and smaller when the data is skewed left.
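As a quick check of how skew pulls the mean but not the median, here is a minimal Python sketch. The data set is made up for illustration (it is not from class), and it assumes the usual rule that the median sits at position (n + 1)/2 of the sorted list.

```python
import statistics

# Hypothetical right-skewed data (made up for illustration), already sorted.
data = [1, 2, 2, 3, 3, 4, 20]

n = len(data)
position = (n + 1) / 2            # position of the median in the sorted list
median = statistics.median(data)  # the value at that position
mean = statistics.mean(data)      # pulled toward the long right tail

print("median position:", position)
print("median:", median)
print("mean:", mean)
```

The one large value drags the mean above the median, which is the pattern to expect when data is skewed right.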

We introduced the five number summary and box-and-whisker plots (boxplots). We also talked about the interquartile range (IQR) and how to use the 1.5 × IQR rule to determine if a data value is an outlier.

We started with this simple example:

An 8-man crew team actually includes 9 men: the 8 rowers and one coxswain. Suppose the weights (in pounds) of the 9 men on a team are as follows:

120 180 185 200 210 210 215 215 215

Find the 5-number summary and draw a box-and-whisker plot for this data. Is the coxswain who weighs 120 lbs. an outlier?
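One way to check your work on the crew example is with a short Python sketch. It assumes the quartiles are the medians of the lower and upper halves of the sorted data (leaving out the middle value when n is odd) and that outliers are flagged with the 1.5 × IQR rule. Software packages sometimes use slightly different quartile conventions, so small differences are possible.

```python
import statistics

weights = sorted([120, 180, 185, 200, 210, 210, 215, 215, 215])

n = len(weights)
half = n // 2
lower_half = weights[:half]    # values below the median position
upper_half = weights[-half:]   # values above the median position

q1 = statistics.median(lower_half)
med = statistics.median(weights)
q3 = statistics.median(upper_half)
five_number_summary = (weights[0], q1, med, q3, weights[-1])

# 1.5 x IQR rule: anything beyond the fences is flagged as an outlier.
iqr = q3 - q1
lower_fence = q1 - 1.5 * iqr
upper_fence = q3 + 1.5 * iqr
outliers = [w for w in weights if w < lower_fence or w > upper_fence]

print("five-number summary:", five_number_summary)
print("fences:", lower_fence, upper_fence)
print("outliers:", outliers)
```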

| Day | Section | Topic |
|---|---|---|
| Mon, Jan 19 | Martin Luther King Jr. Day - no class | |
| Wed, Jan 21 | 2.1.4 | Standard deviation |
| Fri, Jan 23 | 4.1 | Normal distribution |

Today we talked about robust statistics such as the median and IQR that are not affected by outliers and skew. We also introduced the standard deviation. We did this one example of a standard deviation calculation by hand, but you won’t ever have to do that again in this class.

11 students just completed a nursing program. Here is the number of years it took each student to complete the program. Find the standard deviation of these numbers.

3 3 3 3 4 4 4 4 5 5 6

From now on we will just use software to find standard deviation. In a spreadsheet (Excel or Google Sheets) you can use the `=STDEV()` function.
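For reference, here is a minimal Python sketch of the by-hand calculation for the nursing program data. It assumes the sample standard deviation (dividing by n − 1), which is also what the spreadsheet `STDEV` function computes. The last line compares the result with Python's built-in `statistics.stdev`.

```python
import math
import statistics

years = [3, 3, 3, 3, 4, 4, 4, 4, 5, 5, 6]

n = len(years)
mean = sum(years) / n                            # step 1: the average
squared_devs = [(y - mean) ** 2 for y in years]  # step 2: squared deviations
variance = sum(squared_devs) / (n - 1)           # step 3: divide by n - 1
sd = math.sqrt(variance)                         # step 4: square root

print("mean:", mean)
print("standard deviation:", sd)
print("statistics.stdev:", statistics.stdev(years))
```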

Which of the following data sets has the largest standard deviation?

We finished by looking at some examples of histograms that have a shape that looks roughly like a bell. This is a very common pattern in nature that is called the normal distribution.

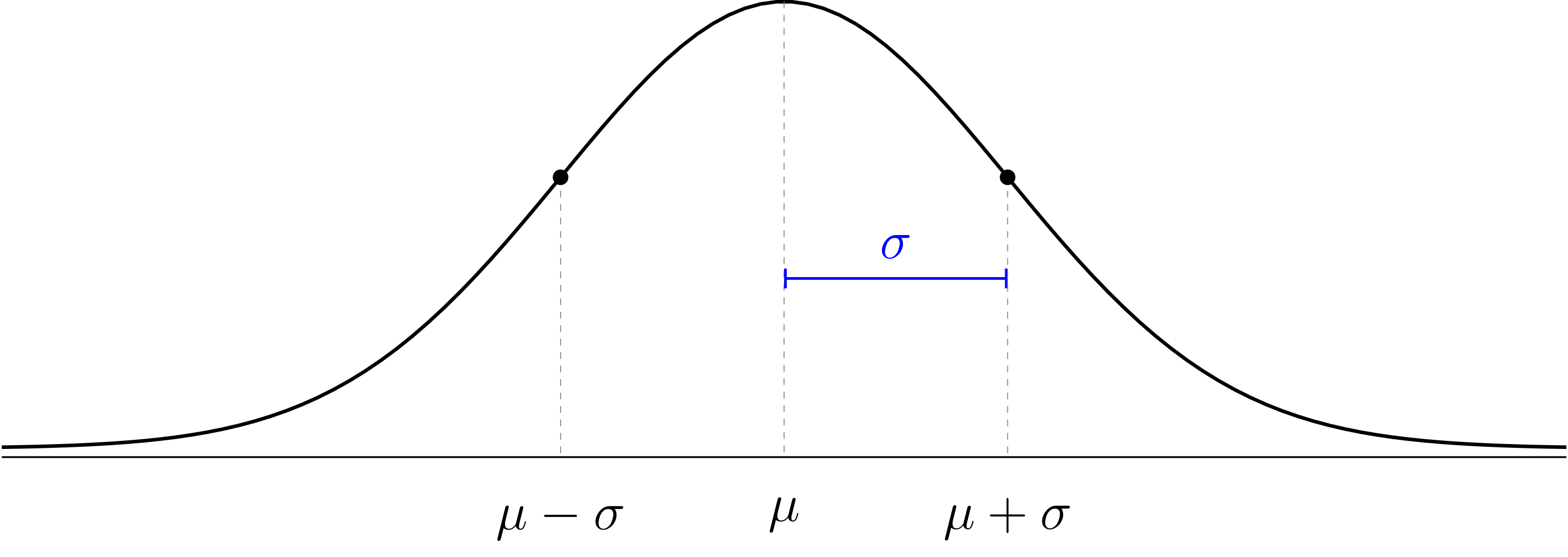

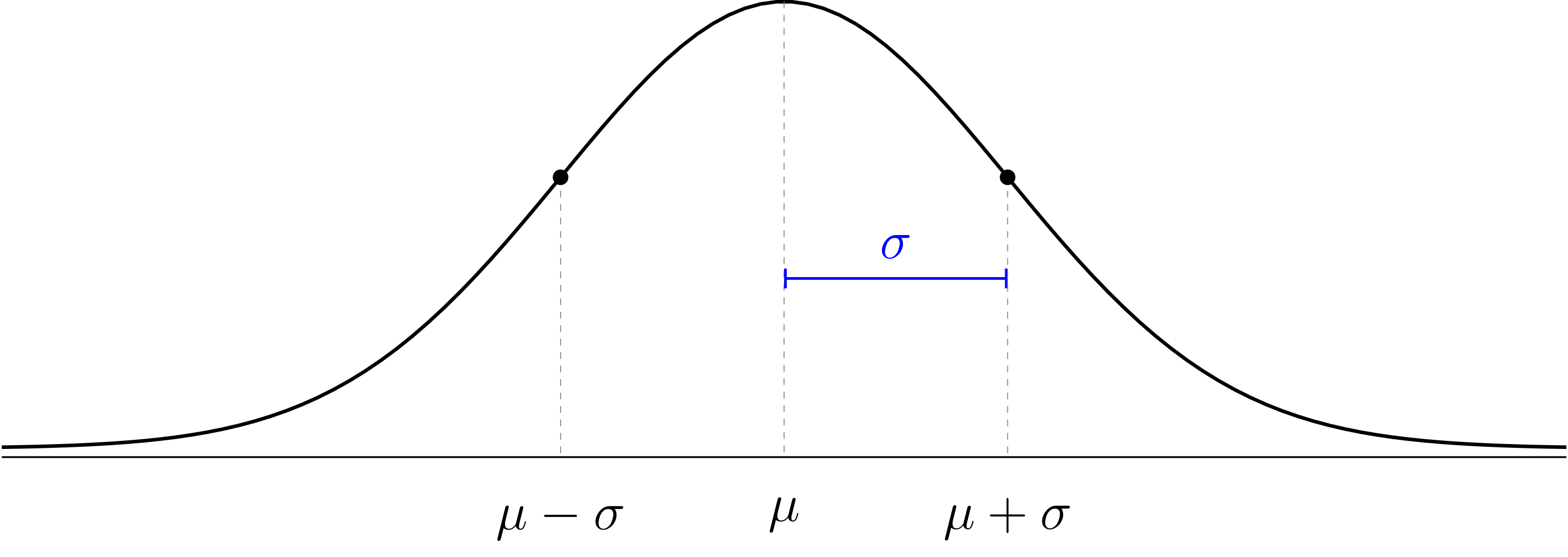

The normal distribution is a mathematical model for data with a histogram that is shaped like a bell. The model has the following features:

The normal distribution is a theoretical model that doesn’t have to perfectly match the data to be useful. We use the Greek letters μ and σ for the theoretical mean and standard deviation of the normal distribution to distinguish them from the sample mean x̄ and standard deviation s of our data, which probably won’t follow the theoretical model perfectly.

We talked about z-values and the 68-95-99.7 rule.
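The 68-95-99.7 rule can be checked against the theoretical model. This sketch uses `NormalDist` from Python's standard library (Python 3.8 or later), which is not the tool we used in class. It computes the share of a normal distribution within 1, 2, and 3 standard deviations of the mean.

```python
from statistics import NormalDist

standard_normal = NormalDist(mu=0, sigma=1)

# Share of the distribution within k standard deviations of the mean.
within = {}
for k in (1, 2, 3):
    within[k] = standard_normal.cdf(k) - standard_normal.cdf(-k)
    print(f"within {k} SD: {100 * within[k]:.1f}%")
```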

We also did these exercises before the workshop.

In 2020, Farmville got 61 inches of rain total (making 2020 the second wettest year on record). How many standard deviations is this above average?

The average high temperature in Anchorage, AK in January is 21°F with σ = 10°F. The average high temperature in Honolulu, HI in January is 80°F with σ = 8°F. In which city would it be more unusual to have a high temperature of 57°F in January?
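A short Python sketch of the z-value comparison for the two cities, using the means and standard deviations given in the exercise. The helper name `z_value` is just a label chosen for this example.

```python
def z_value(x, mu, sigma):
    """Number of standard deviations that x is above (+) or below (-) the mean."""
    return (x - mu) / sigma

z_anchorage = z_value(57, mu=21, sigma=10)
z_honolulu = z_value(57, mu=80, sigma=8)

print("Anchorage z:", z_anchorage)
print("Honolulu z:", z_honolulu)

# The city whose z-value is farther from 0 has the more unusual 57 degree day.
more_unusual = "Anchorage" if abs(z_anchorage) > abs(z_honolulu) else "Honolulu"
print("more unusual in:", more_unusual)
```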

| Day | Section | Topic |
|---|---|---|
| Mon, Jan 26 | 4.1.5 | No class (snow day) |
| Wed, Jan 28 | 4.1.4 | Normal distribution computations |
| Fri, Jan 30 | 2.1, 8.1 | Scatterplots and correlation |

We introduced how to find percentages on a normal distribution for locations that aren’t exactly one, two, or three standard deviations away from the mean. I strongly recommend downloading the Probability Distributions app (Android version, iOS version) for your phone.

We talked about how to use the app to solve the following types of problems:

(Percent below) SAT verbal scores are roughly normally distributed with mean μ = 500 and standard deviation σ = 100. Estimate the percentile of a student with a 560 verbal score.

(Percent above) What percent of students get above a 560 verbal score on the SATs?

(Percent between) What percent of men are between 6 and 6 and a half feet tall?

(Percent to locations) What is the height of a man in the 25th percentile?
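If you don't have the app handy, the same four types of calculation can be sketched with `NormalDist` from Python's standard library (Python 3.8 or later). This is a substitute for the app, not what we used in class. The sketch uses the SAT model (μ = 500, σ = 100) for all four problem types. The "between" cutoffs of 500 and 560 and the 25th percentile of SAT scores are made-up illustrations, not the height problems above.

```python
from statistics import NormalDist

sat = NormalDist(mu=500, sigma=100)

# Percent below: area to the left of 560.
below_560 = sat.cdf(560)

# Percent above: everything that is not below.
above_560 = 1 - below_560

# Percent between (illustrative cutoffs): subtract the two areas to the left.
between_500_560 = sat.cdf(560) - sat.cdf(500)

# Percentile to location: the score with 25% of the distribution below it.
score_25th = sat.inv_cdf(0.25)

print(f"below 560: {100 * below_560:.1f}%")
print(f"above 560: {100 * above_560:.1f}%")
print(f"between 500 and 560: {100 * between_500_560:.1f}%")
print(f"25th percentile score: {score_25th:.0f}")
```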

We also talked about the shorthand notation P(72 < X < 78), which literally means “the probability that the outcome X is between 72 and 78”. Then we did this workshop.

We introduced scatterplots and correlation coefficients with these examples:

Important concept: correlation does not change if you change the units or apply a simple linear transformation to the axes. Correlation just measures the strength of the linear trend in the scatterplot.

Another thing to know about the correlation coefficient is that it only measures the strength of a linear trend. The correlation coefficient is not as useful when a scatterplot has a clearly visible nonlinear trend.
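Both points can be checked with a small Python sketch. The heights and weights below are made-up numbers for illustration, and `correlation` is a helper written for this example using the usual formula for the correlation coefficient. The first comparison converts inches to centimeters. The second uses a perfect parabola, where the trend is strong but not linear.

```python
import math

def correlation(xs, ys):
    """Pearson correlation coefficient r for paired lists xs and ys."""
    n = len(xs)
    x_bar = sum(xs) / n
    y_bar = sum(ys) / n
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    sxx = sum((x - x_bar) ** 2 for x in xs)
    syy = sum((y - y_bar) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)

# Hypothetical data: heights in inches and weights in pounds.
heights_in = [60, 64, 66, 70, 72]
weights_lb = [115, 140, 150, 180, 190]

# Changing units (inches to centimeters) should not change r.
heights_cm = [2.54 * h for h in heights_in]
r_inches = correlation(heights_in, weights_lb)
r_cm = correlation(heights_cm, weights_lb)
print("r with inches:", r_inches)
print("r with centimeters:", r_cm)

# A perfect but nonlinear pattern: y = x squared on symmetric x-values.
xs = [-2, -1, 0, 1, 2]
ys = [x ** 2 for x in xs]
r_parabola = correlation(xs, ys)
print("r for the parabola:", r_parabola)
```

The parabola has an obvious pattern, but r comes out to zero because there is no linear trend for it to measure.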

After we finished that, we talked about explanatory & response variables (see section 1.2.4 in the book).

An article in the journal Pediatrics found an association between the amount of acetaminophen (Tylenol) taken by pregnant mothers and ADHD symptoms in their children later in life. What are the variables? Which is explanatory and which is response?

Does your favorite team have a home field advantage? If you wanted to answer this question, you could track the following two variables for each game your team plays: Did your team win or lose, and was it a home game or away. Which of these variables is explanatory and which is response?

| Day | Section | Topic |
|---|---|---|
| Mon, Feb 2 | 8.2 | Least squares regression introduction |
| Wed, Feb 4 | 8.2 | Least squares regression practice |
| Fri, Feb 6 | 1.3 | Sampling: populations and samples |

We talked about least squares regression. The least squares regression line has these features:

You won’t have to calculate the correlation r or the standard deviations s_x and s_y, but you might have to use them to find the formula for a regression line.
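Here is a minimal Python sketch of going from summary statistics to a regression line. It assumes the standard least squares facts that the slope is r · s_y / s_x and that the line passes through the point of averages (x̄, ȳ). The summary numbers are made up for illustration, and `regression_line` is a helper name chosen for this example.

```python
def regression_line(x_bar, s_x, y_bar, s_y, r):
    """Return (slope, intercept) of the least squares line from summary stats."""
    slope = r * s_y / s_x               # units: y-units per x-unit
    intercept = y_bar - slope * x_bar   # forces the line through (x_bar, y_bar)
    return slope, intercept

# Hypothetical summary statistics: x in centimeters, y in inches.
slope, intercept = regression_line(x_bar=25, s_x=2, y_bar=68, s_y=4, r=0.8)

# Use the line to predict y for a new x-value.
x_new = 27
prediction = intercept + slope * x_new

print("slope (inches per cm):", slope)
print("intercept (inches):", intercept)
print("predicted y at x = 27:", prediction)
```

Notice that the slope inherits its units from both variables: here it is inches of y per centimeter of x.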

We looked at these examples:

Keep in mind that regression lines have two important applications.

It is important to be able to describe the units of the slope.

What are the units of the slope of the regression line for predicting BAC from the number of beers someone drinks?

What are the units of the slope for predicting someone’s weight from their height?

We also introduced the following concepts.

The coefficient of determination R² represents the proportion of the variability of the y-values that follows the trend line. The remaining 1 − R² represents the proportion of the variability that is above and below the trend line.

Regression to the mean. Extreme x-values tend to have less extreme predicted y-values in a least squares regression model.
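Both ideas come down to simple arithmetic with the correlation r. The sketch below uses a made-up correlation of 0.5 and assumes two standard facts about least squares regression with one explanatory variable: the coefficient of determination is r squared, and the predicted z-value for y is r times the z-value of x.

```python
r = 0.5  # hypothetical correlation, chosen for illustration

# Coefficient of determination and the leftover share of variability.
r_squared = r ** 2
unexplained = 1 - r_squared

# Regression to the mean: an x-value 2 standard deviations above average
# has a predicted y-value only r * 2 standard deviations above average.
z_x = 2.0
predicted_z_y = r * z_x

print("R squared:", r_squared)
print("remaining variability:", unexplained)
print("predicted z for y:", predicted_z_y)
```

Because r is always between −1 and 1, the predicted z-value for y is never more extreme than the z-value for x.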

Before the workshop, we started with this warm-up exercise.

A sample of 20 college students looked at the relationship between foot print length (cm) and height (in). The sample had the following statistics:

We talked about the difference between samples and populations. The central problem of statistics is to use sample statistics to answer questions about population parameters.

We looked at an example of sampling from the Gettysburg Address, and we talked about the central problem of statistics: How can you answer questions about the population using samples?

This is hard because sample statistics usually don’t match the true population parameter. There are two reasons why:

We looked at this case study:

Important Concepts

Bigger samples have less random error.

Bigger samples don’t reduce bias.

The only sure way to avoid bias is a simple random sample.
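A small simulation can make the first two concepts concrete. This is only a sketch: the population is 1000 made-up "word lengths", and the biased sampler is one hypothetical way to mimic picking words by eye, where longer words are more likely to be chosen. The function names are choices made for this example.

```python
import random
import statistics

random.seed(1)  # fixed seed so the sketch gives the same numbers every run

# Hypothetical population: 1000 made-up word lengths from 1 to 10.
population = [random.randint(1, 10) for _ in range(1000)]
true_mean = statistics.mean(population)

def srs_mean(n):
    """Mean of a simple random sample of size n (every word equally likely)."""
    return statistics.mean(random.sample(population, n))

def biased_mean(n):
    """Mean of a sample in which longer words are more likely to be picked.

    random.choices draws with replacement, weighted here by word length.
    """
    return statistics.mean(random.choices(population, weights=population, k=n))

def center_and_spread(sampler, n, reps=1000):
    """Average and standard deviation of the sample means over many repeats."""
    means = [sampler(n) for _ in range(reps)]
    return statistics.mean(means), statistics.stdev(means)

results = {}
print("true mean:", true_mean)
for n in (10, 100):
    results[("srs", n)] = center_and_spread(srs_mean, n)
    results[("biased", n)] = center_and_spread(biased_mean, n)
    print(n, "SRS:", results[("srs", n)], "biased:", results[("biased", n)])
```

In both methods the spread of the sample means shrinks as n grows, which is the random error getting smaller. The biased method stays centered well above the true mean no matter how large n gets.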

| Day | Section | Topic |
|---|---|---|
| Mon, Feb 9 | 1.3 | Bias versus random error |
| Wed, Feb 11 | Review | |
| Fri, Feb 13 | Midterm 1 | |

We did this workshop.

| Day | Section | Topic |
|---|---|---|
| Mon, Feb 16 | 1.4 | Randomized controlled experiments |
| Wed, Feb 18 | 3.1 | Defining probability |
| Fri, Feb 20 | 3.1 | Multiplication and addition rules |

| Day | Section | Topic |
|---|---|---|
| Mon, Feb 23 | 3.4 | Weighted averages & expected value |
| Wed, Feb 25 | 3.4 | Random variables |
| Fri, Feb 27 | 7.1 | Sampling distributions |

| Day | Section | Topic |
|---|---|---|
| Mon, Mar 2 | 5.1 | Sampling distributions for proportions |
| Wed, Mar 4 | 5.2 | Confidence intervals for a proportion |
| Fri, Mar 6 | 5.2 | Confidence intervals for a proportion - cont’d |

| Day | Section | Topic |
|---|---|---|
| Mon, Mar 16 | 5.3 | Hypothesis testing for a proportion |
| Wed, Mar 18 | Review | |
| Fri, Mar 20 | Midterm 2 | |

| Day | Section | Topic |
|---|---|---|
| Mon, Mar 23 | 6.1 | Inference for a single proportion |
| Wed, Mar 25 | 5.3.3 | Decision errors |
| Fri, Mar 27 | 6.2 | Difference of two proportions (hypothesis tests) |

| Day | Section | Topic |
|---|---|---|
| Mon, Mar 30 | 6.2.3 | Difference of two proportions (confidence intervals) |
| Wed, Apr 1 | 7.1 | Introducing the t-distribution |
| Fri, Apr 3 | 7.1.4 | One sample t-confidence intervals |

| Day | Section | Topic |
|---|---|---|
| Mon, Apr 6 | 7.2 | Paired data |
| Wed, Apr 8 | 7.3 | Difference of two means |
| Fri, Apr 10 | 7.3 | Difference of two means - cont’d |

| Day | Section | Topic |
|---|---|---|
| Mon, Apr 13 | 7.4 | Statistical power |
| Wed, Apr 15 | Review | |
| Fri, Apr 17 | Midterm 3 | |

| Day | Section | Topic |
|---|---|---|
| Mon, Apr 20 | 6.3 | Chi-squared statistic |
| Wed, Apr 22 | 6.4 | Testing association with chi-squared |
| Fri, Apr 24 | Choosing the right technique | |
| Mon, Apr 27 | Last day, recap & review | |